Visualizing the gradient descent method

By a mysterious writer

Last updated 30 May 2024

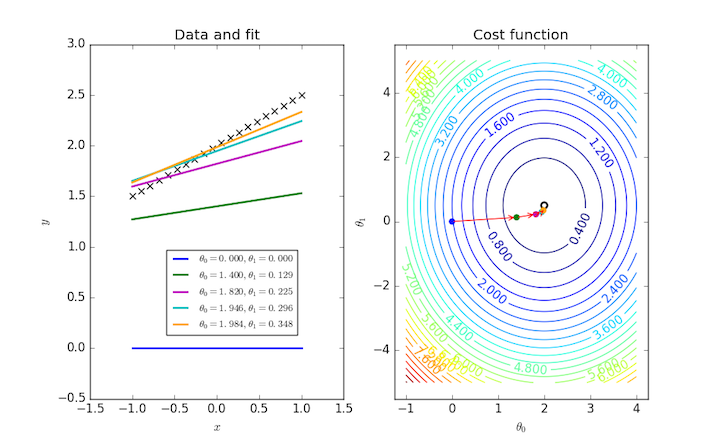

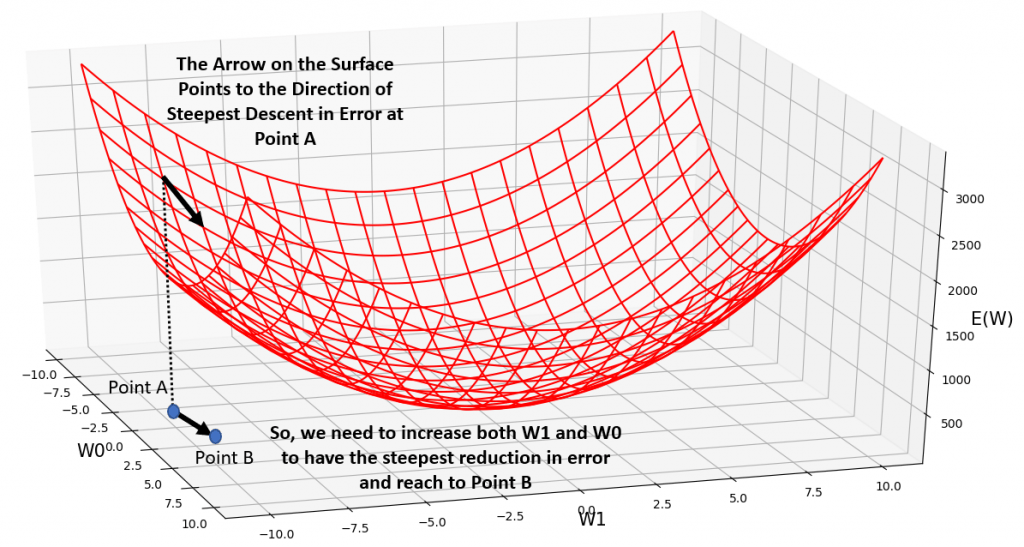

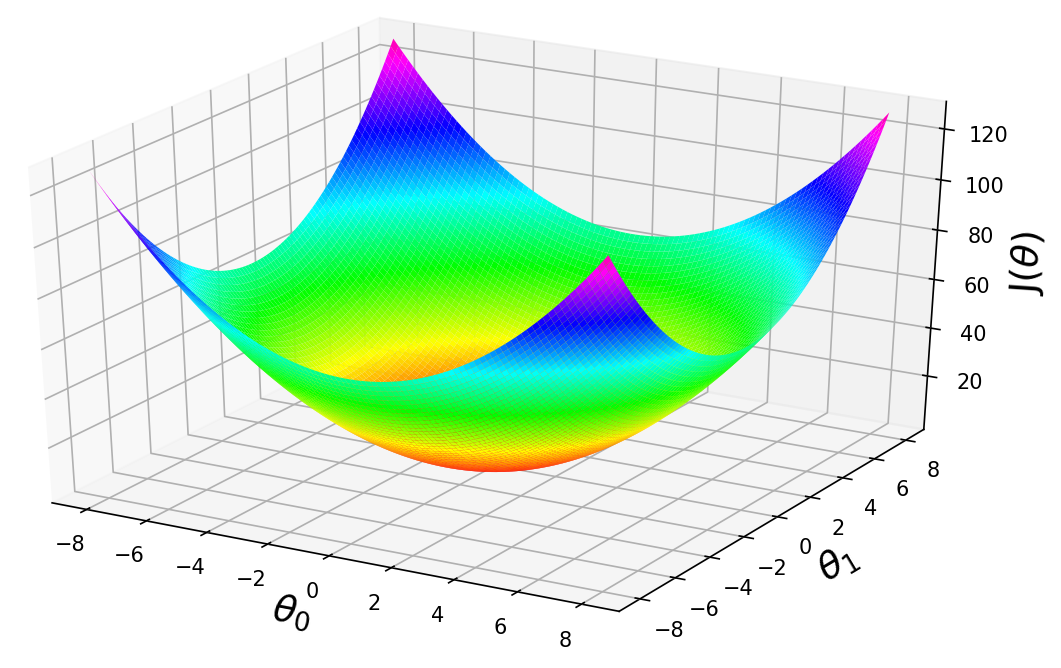

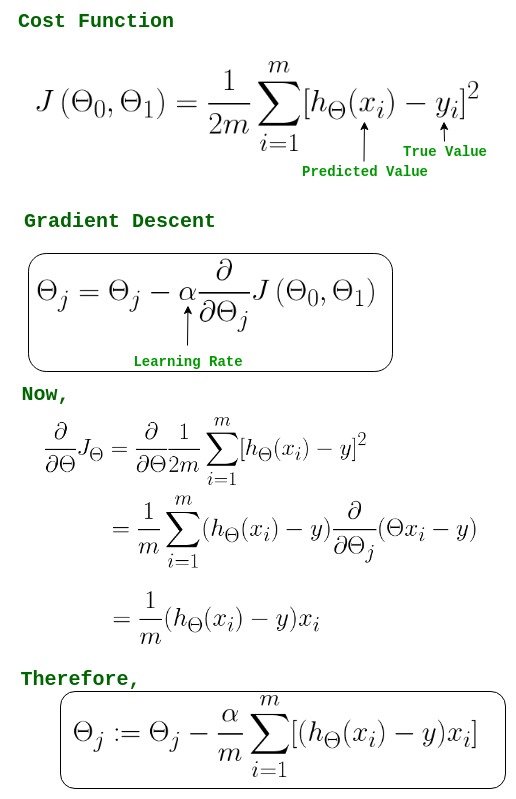

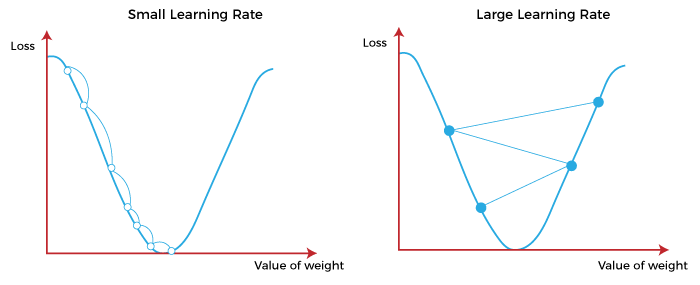

In the gradient descent method of optimization, a hypothesis function, $h_\boldsymbol{\theta}(x)$, is fitted to a data set, $(x^{(i)}, y^{(i)})$ ($i=1,2,\cdots,m$), by minimizing an associated cost function, $J(\boldsymbol{\theta})$, with respect to the parameters $\boldsymbol\theta = (\theta_0, \theta_1, \cdots)$. The cost function measures how closely the hypothesis fits the data for a given choice of $\boldsymbol \theta$.
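As a minimal sketch of this idea, the Python below fits a straight-line hypothesis to a small data set by repeatedly stepping against the gradient of the cost. Several choices here are assumptions made for illustration and are not stated in the text: the hypothesis is taken to be linear, $h_\boldsymbol{\theta}(x) = \theta_0 + \theta_1 x$, the cost is taken to be half the mean squared error, and the names `hypothesis`, `cost`, `gradient_descent`, the learning rate `alpha`, and the toy data are all hypothetical.

```python
def hypothesis(theta0, theta1, x):
    """Assumed linear hypothesis h_theta(x) = theta0 + theta1 * x."""
    return theta0 + theta1 * x


def cost(theta0, theta1, xs, ys):
    """Assumed cost J(theta): half the mean squared error over the data."""
    m = len(xs)
    return sum((hypothesis(theta0, theta1, x) - y) ** 2
               for x, y in zip(xs, ys)) / (2 * m)


def gradient_descent(xs, ys, alpha=0.1, steps=2000):
    """Minimize J by stepping against its gradient; alpha is the step size."""
    m = len(xs)
    theta0, theta1 = 0.0, 0.0  # arbitrary starting point
    # Record (theta0, theta1, J) at every step so the path can be plotted.
    history = [(theta0, theta1, cost(theta0, theta1, xs, ys))]
    for _ in range(steps):
        errors = [hypothesis(theta0, theta1, x) - y for x, y in zip(xs, ys)]
        grad0 = sum(errors) / m                               # dJ/dtheta0
        grad1 = sum(e * x for e, x in zip(errors, xs)) / m    # dJ/dtheta1
        # Update both parameters simultaneously from the same gradient.
        theta0 -= alpha * grad0
        theta1 -= alpha * grad1
        history.append((theta0, theta1, cost(theta0, theta1, xs, ys)))
    return theta0, theta1, history


# Toy data lying exactly on y = 1 + 2x (made up for this example).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
theta0, theta1, history = gradient_descent(xs, ys)
print(theta0, theta1, history[-1][2])
```

The `history` list holds the sequence of parameter values and costs, which is the kind of path one would overlay on a contour plot of $J(\boldsymbol\theta)$ to visualize the descent.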

Subgradient Descent Explained, Step by Step

How to visualize Gradient Descent using Contour plot in Python

Deriving the Gradient Descent Rule (PART-1)

Variance Reduction Methods

Guide to Gradient Descent Algorithm: A Comprehensive implementation in Python - Machine Learning Space

Reducing Loss: Gradient Descent, Machine Learning

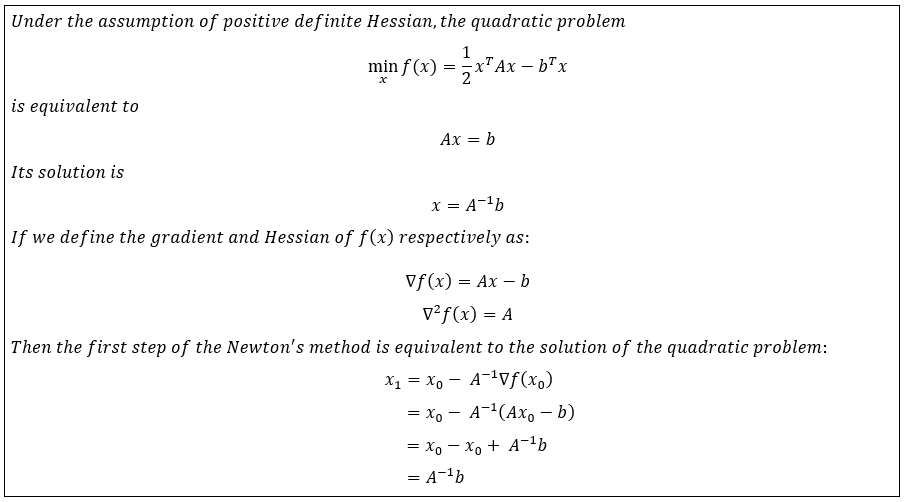

Visualizing Newton's Method for Optimization II

From Mystery to Mastery: How Gradient Descent is Reshaping Our World

A Data Scientist's Guide to Gradient Descent and Backpropagation Algorithms

Gradient Descent animation: 1. Simple linear Regression, by Tobias Roeschl

Neural networks and deep learning

Gradient Descent from scratch and visualization

Visualization example of gradient descent algorithm to converge on

Recommended for you

-

Mod-06 Lec-13 Steepest Descent Method30 maio 2024

Mod-06 Lec-13 Steepest Descent Method30 maio 2024 -

Steepest Descent Method - an overview30 maio 2024

Steepest Descent Method - an overview30 maio 2024 -

Steepest Descent and Newton's Method in Python, from Scratch: A Comparison, by Nicolo Cosimo Albanese30 maio 2024

Steepest Descent and Newton's Method in Python, from Scratch: A Comparison, by Nicolo Cosimo Albanese30 maio 2024 -

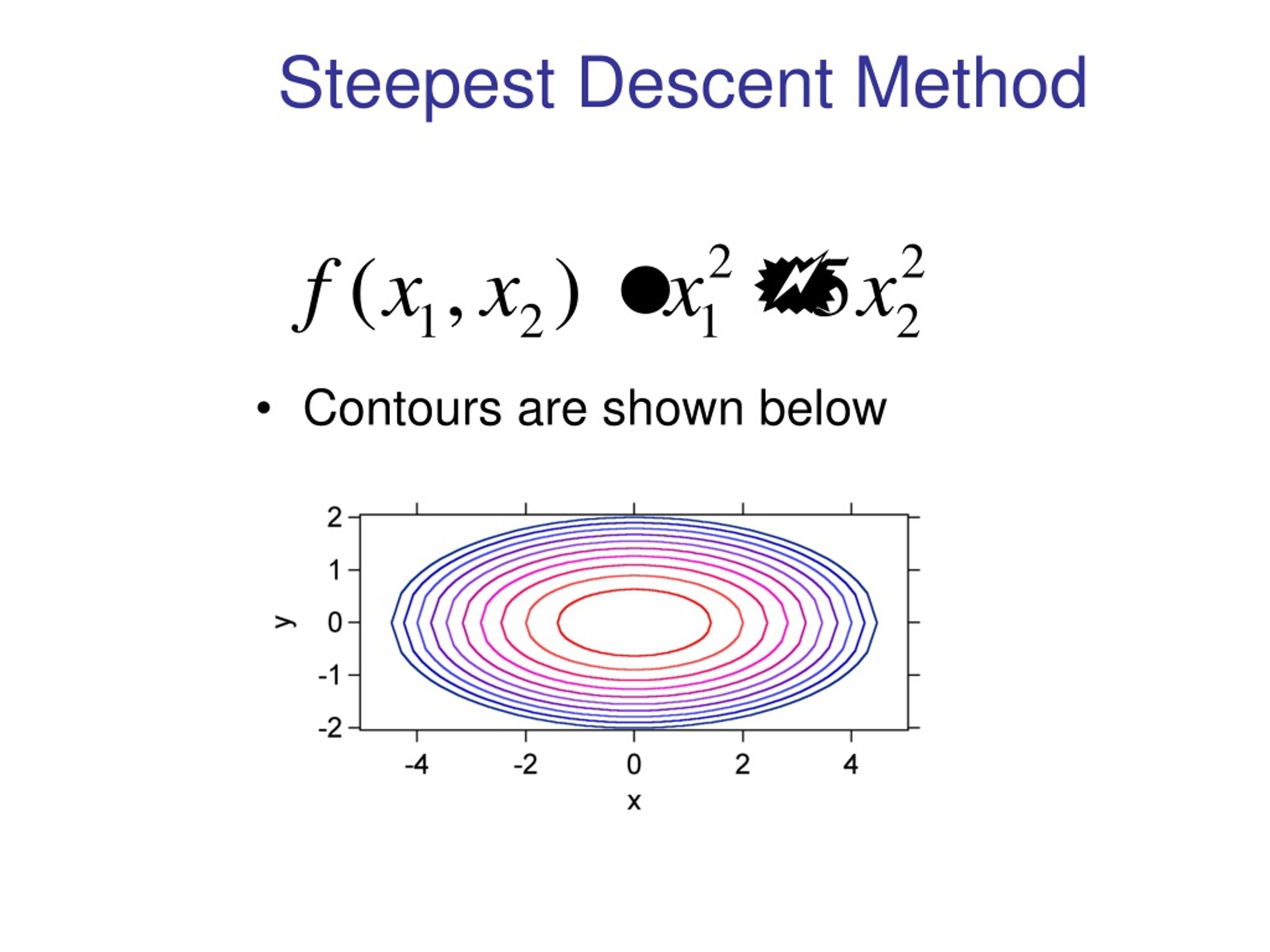

PPT - Steepest Descent Method PowerPoint Presentation, free download - ID:921260530 maio 2024

PPT - Steepest Descent Method PowerPoint Presentation, free download - ID:921260530 maio 2024 -

Gradient Descent in Linear Regression - GeeksforGeeks30 maio 2024

Gradient Descent in Linear Regression - GeeksforGeeks30 maio 2024 -

Curves of Steepest Descent for 3D Functions - Wolfram Demonstrations Project30 maio 2024

Curves of Steepest Descent for 3D Functions - Wolfram Demonstrations Project30 maio 2024 -

Chapter 4 Line Search Descent Methods Introduction to Mathematical Optimization30 maio 2024

Chapter 4 Line Search Descent Methods Introduction to Mathematical Optimization30 maio 2024 -

![PDF] The Method of Steepest Descent for Feedforward Artificial Neural Networks](https://d3i71xaburhd42.cloudfront.net/3186154812793657dbd65c49a192358707006880/1-Figure1-1.png) PDF] The Method of Steepest Descent for Feedforward Artificial Neural Networks30 maio 2024

PDF] The Method of Steepest Descent for Feedforward Artificial Neural Networks30 maio 2024 -

example of steepest Descent (left) and Conjugate Gradient (right)30 maio 2024

example of steepest Descent (left) and Conjugate Gradient (right)30 maio 2024 -

Gradient Descent in Machine Learning - Javatpoint30 maio 2024

Gradient Descent in Machine Learning - Javatpoint30 maio 2024

você pode gostar

-

Cartas Pokemon Para Imprimir Raichu, Raichu pokemon card, Old pokemon cards30 maio 2024

Cartas Pokemon Para Imprimir Raichu, Raichu pokemon card, Old pokemon cards30 maio 2024 -

desenho de personagem cacto fofo mascote 4217875 Vetor no Vecteezy30 maio 2024

desenho de personagem cacto fofo mascote 4217875 Vetor no Vecteezy30 maio 2024 -

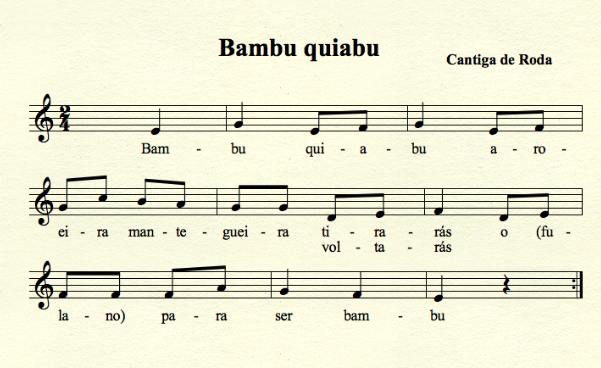

Portal do Professor - Música - Jogos de Mãos: resgate, ampliação e composição – aula 930 maio 2024

Portal do Professor - Música - Jogos de Mãos: resgate, ampliação e composição – aula 930 maio 2024 -

Antigo moinho de vento de madeira em um dia ensolarado. antigo moinho holandês tradicional.30 maio 2024

Antigo moinho de vento de madeira em um dia ensolarado. antigo moinho holandês tradicional.30 maio 2024 -

Jogos similares a Aviator - AviatorsWS30 maio 2024

Jogos similares a Aviator - AviatorsWS30 maio 2024 -

Final de temporada de 'Miraculous' terá estreia mundial no Brasil - 10/03/2022 - Zapping - Cristina Padiglione - F530 maio 2024

Final de temporada de 'Miraculous' terá estreia mundial no Brasil - 10/03/2022 - Zapping - Cristina Padiglione - F530 maio 2024 -

fantasia bob esponja em Promoção na Shopee Brasil 202330 maio 2024

-

Jogo Crash Bandicoot 4 It's About Time - PS4 em Promoção na Americanas30 maio 2024

Jogo Crash Bandicoot 4 It's About Time - PS4 em Promoção na Americanas30 maio 2024 -

Dinolandia : The first dinosaur anime ever made, Fan Fiction30 maio 2024

Dinolandia : The first dinosaur anime ever made, Fan Fiction30 maio 2024 -

The Story of Blunder - Fred Penner30 maio 2024

The Story of Blunder - Fred Penner30 maio 2024